Xata Agent v0.3.1: Custom tools via MCP, Ollama integration, support for reasoning models & more

Version 0.3.1 of the open-source Xata Agent adds support for custom MCP servers and tools, introduces Ollama as a local LLM provider, and includes support for reasoning models O1 and O4-mini.

Author

Gulcin Yildirim Jelinek

We launched our open-source Xata Agent to explore what it means to have an AI-native approach to database observability: one where a smart, extensible agent monitors your PostgreSQL workloads, detects issues, and helps optimize performance using built-in expertise. Since then, we’ve seen a strong response from the community, and it’s clear that agent-based observability resonates.

With the release of v0.3.1, we’re taking another big step forward. This version introduces support for custom MCP servers and tools, adds Ollama as a local LLM provider, and includes reasoning model support for O1 and O4-mini.

Let’s take a closer look at what went into this release. If you'd rather go through the release notes, you can check them out here.

To update the Agent, assuming you are using the provided docker-compose file, simply update the image to xataio/agent:0.3.1 and restart the Agent.

🛠️ Custom tools via MCP

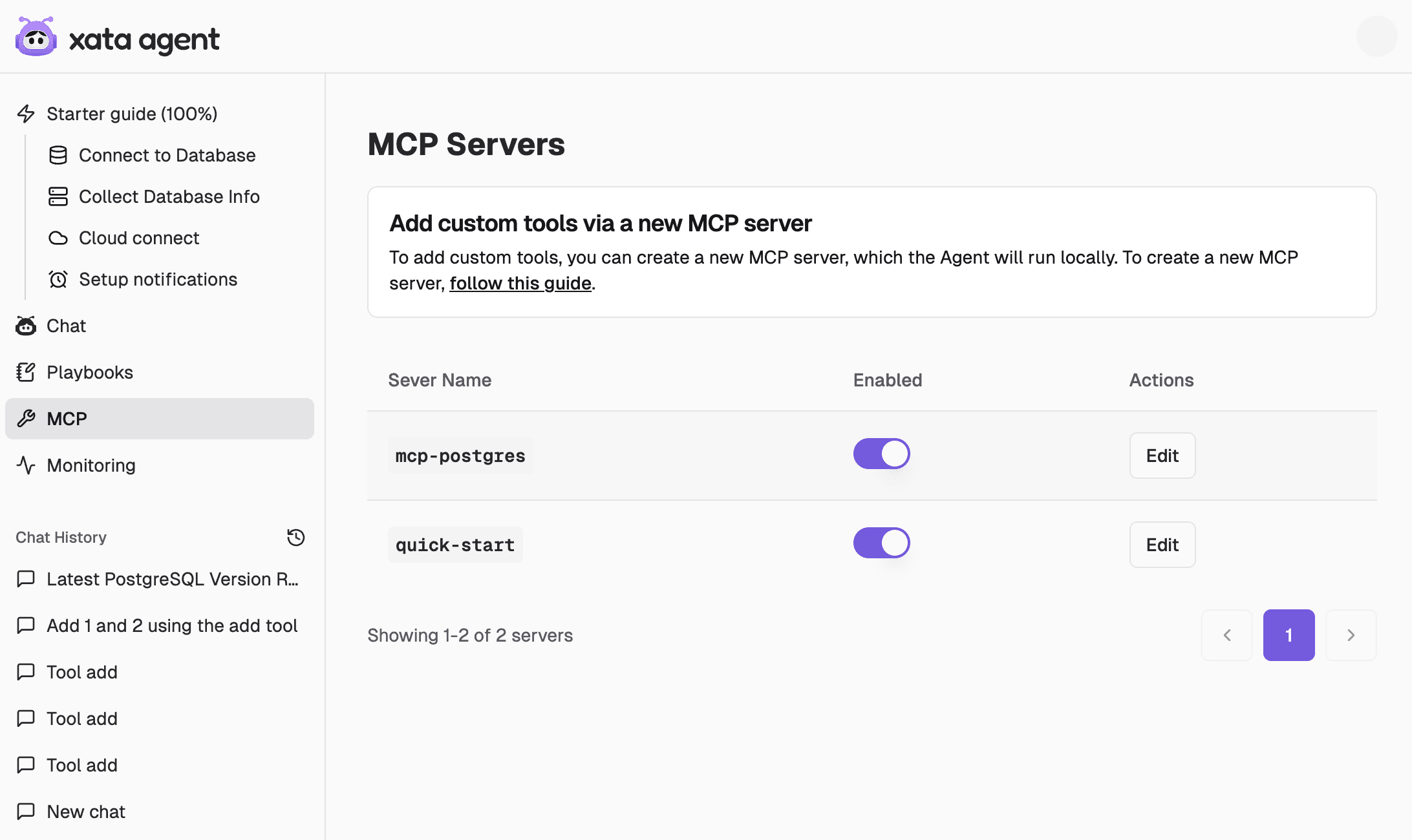

This release introduces a way to extend the Agent with your own tools, in the form of MCP servers. You can, for example, provide the Agent with tools that are specific to your infrastructure, or you can use MCP tools created by others. For now, only local MCP servers (stdio protocol) are supported. In the future, we also want to support calling MCP servers offered by third parties.

To add a new MCP server, you simply drop the JavaScript source code in the mcp-servers folder of the Agent distribution. You can develop the MCP server in TypeScript, and we have a small guide on how to compile the server and install it in the Agent.
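To give a feel for what such a server does, here is a minimal, dependency-free sketch of the two tool-related MCP requests (tools/list and tools/call) as JSON-RPC messages. It is not the Agent's code and it is not a complete server: a real one would normally be built with an MCP SDK and would also handle the initialize handshake. The tool name maintenance_window, its schema, and its static output are hypothetical and exist only for illustration.

```typescript
// Hypothetical tool definition; name, description, schema, and output are illustrative only.
interface ToolDef {
  name: string;
  description: string;
  inputSchema: { type: "object"; properties: Record<string, unknown>; required: string[] };
  run: (args: Record<string, unknown>) => string;
}

const tools: ToolDef[] = [
  {
    name: "maintenance_window",
    description: "Returns the maintenance window for a named cluster (static demo data).",
    inputSchema: {
      type: "object",
      properties: { cluster: { type: "string" } },
      required: ["cluster"],
    },
    run: (args) => `Cluster ${String(args["cluster"])}: Sundays 02:00-03:00 UTC`,
  },
];

interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number | string;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
}

// Handles the two tool-related MCP methods and returns a JSON-RPC response object.
function handleRequest(req: JsonRpcRequest): Record<string, unknown> {
  const reply = (result: unknown) => ({ jsonrpc: "2.0", id: req.id, result });
  const fail = (code: number, message: string) => ({
    jsonrpc: "2.0",
    id: req.id,
    error: { code, message },
  });

  if (req.method === "tools/list") {
    // Advertise tool metadata only; the implementation stays on the server.
    const listed = tools.map((t) => ({
      name: t.name,
      description: t.description,
      inputSchema: t.inputSchema,
    }));
    return reply({ tools: listed });
  }

  if (req.method === "tools/call") {
    const tool = tools.find((t) => t.name === req.params?.name);
    if (!tool) return fail(-32602, `Unknown tool: ${String(req.params?.name)}`);
    const text = tool.run(req.params?.arguments ?? {});
    return reply({ content: [{ type: "text", text }] });
  }

  return fail(-32601, `Method not found: ${req.method}`);
}
```

With the stdio transport, a server like this would read one JSON message per line from stdin, pass it to handleRequest, and write the serialized response to stdout followed by a newline.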

The new UI lets you list MCP servers, toggle them on or off, and see which tools are imported from each server.

This set of changes lays the groundwork for more extensible and customizable observability workflows inside the Xata Agent.

🤖 Ollama integration

Another major highlight of this release is support for Ollama, enabling Agent users to run local LLMs for chat and tool execution.

To get started, simply set the environment variable OLLAMA_ENABLED=1. You can also optionally set OLLAMA_HOME if your Ollama installation is in a non-default location. Once enabled, the provider registry will fetch available models from your Ollama server and make them accessible in the Agent chat interface.
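As a rough illustration of that flow, the sketch below returns no models unless OLLAMA_ENABLED is set to 1, and otherwise lists the models an Ollama server reports through its /api/tags HTTP endpoint. The function name listOllamaModels, the injected fetchJson helper, and the baseUrl parameter are assumptions made for this example; the Agent's actual provider registry is not shown in this post and may work differently.

```typescript
// Hypothetical helper type: fetches a URL and resolves to the parsed JSON body.
type FetchJson = (url: string) => Promise<unknown>;

// Subset of the Ollama /api/tags response that this sketch relies on.
interface OllamaTagsResponse {
  models: { name: string }[];
}

// Returns the names of locally available models, or [] when Ollama is not enabled.
async function listOllamaModels(
  env: Record<string, string | undefined>,
  fetchJson: FetchJson,
  baseUrl = "http://localhost:11434", // Ollama's default listen address
): Promise<string[]> {
  if (env["OLLAMA_ENABLED"] !== "1") return [];
  const body = (await fetchJson(`${baseUrl}/api/tags`)) as OllamaTagsResponse;
  return body.models.map((m) => m.name);
}
```

In a Node.js process this could be called with process.env and a helper such as (url) => fetch(url).then((r) => r.json()). Injecting the helper keeps the function easy to exercise without a running Ollama server.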

By default, Ollama uses a context size of 2048 tokens, which we found to be insufficient for many tasks. In this release, we attempt to read each model’s supported context size and use that instead (falling back to 4096 if it's not found). The temperature for LLM requests is currently hardcoded to 0.1 for stability.
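The fallback logic can be sketched roughly as follows. This assumes the model metadata is a flat object in which the context length appears under an architecture-prefixed key such as llama.context_length, which is how Ollama typically reports it when showing a model. The function name resolveContextSize is hypothetical, and the Agent's real lookup may differ.

```typescript
const FALLBACK_CONTEXT_SIZE = 4096;

// Picks the model's advertised context length, or falls back to 4096.
// `modelInfo` is assumed to hold keys like "llama.context_length" (assumption, see above).
function resolveContextSize(modelInfo: Record<string, unknown> | undefined): number {
  if (!modelInfo) return FALLBACK_CONTEXT_SIZE;
  for (const key of Object.keys(modelInfo)) {
    const value = modelInfo[key];
    if (key.endsWith(".context_length") && typeof value === "number" && value > 0) {
      return value;
    }
  }
  return FALLBACK_CONTEXT_SIZE;
}
```

The resolved value would then be passed to Ollama as the num_ctx option on each request, together with the fixed temperature of 0.1.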

🚧 There are some known limitations:

- Tool support varies by model: Some models hallucinate tool usage or call non-existent tools. In our testing, qwen 2.5 performed best in tool-rich scenarios, but context-heavy interactions can slow it down significantly when running locally.
- Streaming limitations: Native streaming is not working reliably across all models. To address this, we simulate streaming by waiting for the full LLM response and then progressively streaming it to the client.
- Model capability filtering: The Ollama JS client does not currently expose all metadata fields from the Ollama API. As a result, we show all models in the available list, though ideally we would filter by capabilities such as completion and tools.
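The simulated streaming workaround described above can be sketched as an async generator that waits for the complete response and then emits it in small pieces. The names chunkText and simulateStream, the chunk size, and the delay are all assumptions for illustration, not the Agent's actual implementation.

```typescript
// Splits a completed response into fixed-size pieces.
function chunkText(text: string, chunkSize: number): string[] {
  if (chunkSize <= 0) throw new RangeError("chunkSize must be positive");
  const chunks: string[] = [];
  for (let i = 0; i < text.length; i += chunkSize) {
    chunks.push(text.slice(i, i + chunkSize));
  }
  return chunks;
}

// Waits for the full LLM response, then yields it progressively to the caller.
async function* simulateStream(
  fullResponse: Promise<string>,
  chunkSize = 16,
  delayMs = 10,
): AsyncGenerator<string> {
  const text = await fullResponse; // nothing is emitted until the model has finished
  for (const chunk of chunkText(text, chunkSize)) {
    yield chunk;
    // A short pause between chunks so the client renders text incrementally.
    await new Promise<void>((resolve) => setTimeout(resolve, delayMs));
  }
}
```

The trade-off is latency to first token: the client sees nothing until the model has produced its whole answer, which is most noticeable with large local models.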

🧠 Reasoning models

Initial support for reasoning models O1 and O4-mini is now in place. During integration, we noticed that these models currently don’t support tools (or "functions" in OpenAI terminology) with optional parameters. Fortunately, only two of our tools relied on optional parameters, and we didn’t really need that flexibility, so we made them mandatory for now to ensure compatibility. While these models are now available for use, we’re aware that the UI doesn’t yet display intermediary reasoning steps. That’s something we plan to improve in an upcoming release.
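To make the optional-parameter constraint concrete, here is a small sketch that takes a JSON-Schema-style tool parameter definition and marks every property as required. The ObjectSchema type, the requireAllParameters helper, and the example parameters are hypothetical. The post only says that the two affected tools were changed to use mandatory parameters, not how that change was made.

```typescript
// Minimal JSON-Schema-like shape for a tool's parameters (illustrative subset).
interface ObjectSchema {
  type: "object";
  properties: Record<string, { type: string; description?: string }>;
  required?: string[];
}

// Returns a copy of the schema in which every declared property is required.
function requireAllParameters(schema: ObjectSchema): ObjectSchema {
  return { ...schema, required: Object.keys(schema.properties) };
}

// Hypothetical tool parameters: `limit` starts out optional.
const slowQueriesParams: ObjectSchema = {
  type: "object",
  properties: {
    database: { type: "string", description: "Database to inspect" },
    limit: { type: "number", description: "Maximum number of rows to return" },
  },
  required: ["database"],
};

const strictParams = requireAllParameters(slowQueriesParams);
```

After this transformation the model has to supply a value for every parameter, so any default that used to apply when a value was omitted needs to be spelled out in the tool description instead.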

Conclusion

Apart from the major features I’ve highlighted in this post, we’ve also fixed a few bugs and refactored some components. You can find all the details here.

We’re excited to hear what you think of v0.3.1! If you have ideas for new features, model support, or improvements to the Xata Agent, we’d love to hear from you. Feel free to open up an issue, submit a pull request, or drop by our Discord to chat with the team.
