Writing an LLM Eval with Vercel's AI SDK and Vitest

LLM Evals - testing for applications using LLMs

Author

Richard Gill

The Xata Agent

Recently we launched Xata Agent, an open-source AI agent which helps diagnose issues and suggest optimizations for PostgreSQL databases.

To make sure that Xata Agent still works well after modifying a prompt or switching LLM models, we decided to test it with an Eval. Here, we'll explain how we used Vercel's AI SDK and Vitest to build an Eval in TypeScript.

Testing the Agent with an Eval

The problem with building applications on top of LLMs is that LLMs are a black box.

The Xata Agent contains multiple prompts and tool calls. How do we know that Xata Agent still works well after modifying a prompt or switching model?

To 'evaluate' how our LLM system is working we write a special kind of test - an Eval.

An Eval is usually similar to a system test or an integration test, but specifically built to deal with the uncertainty of making calls to an LLM.

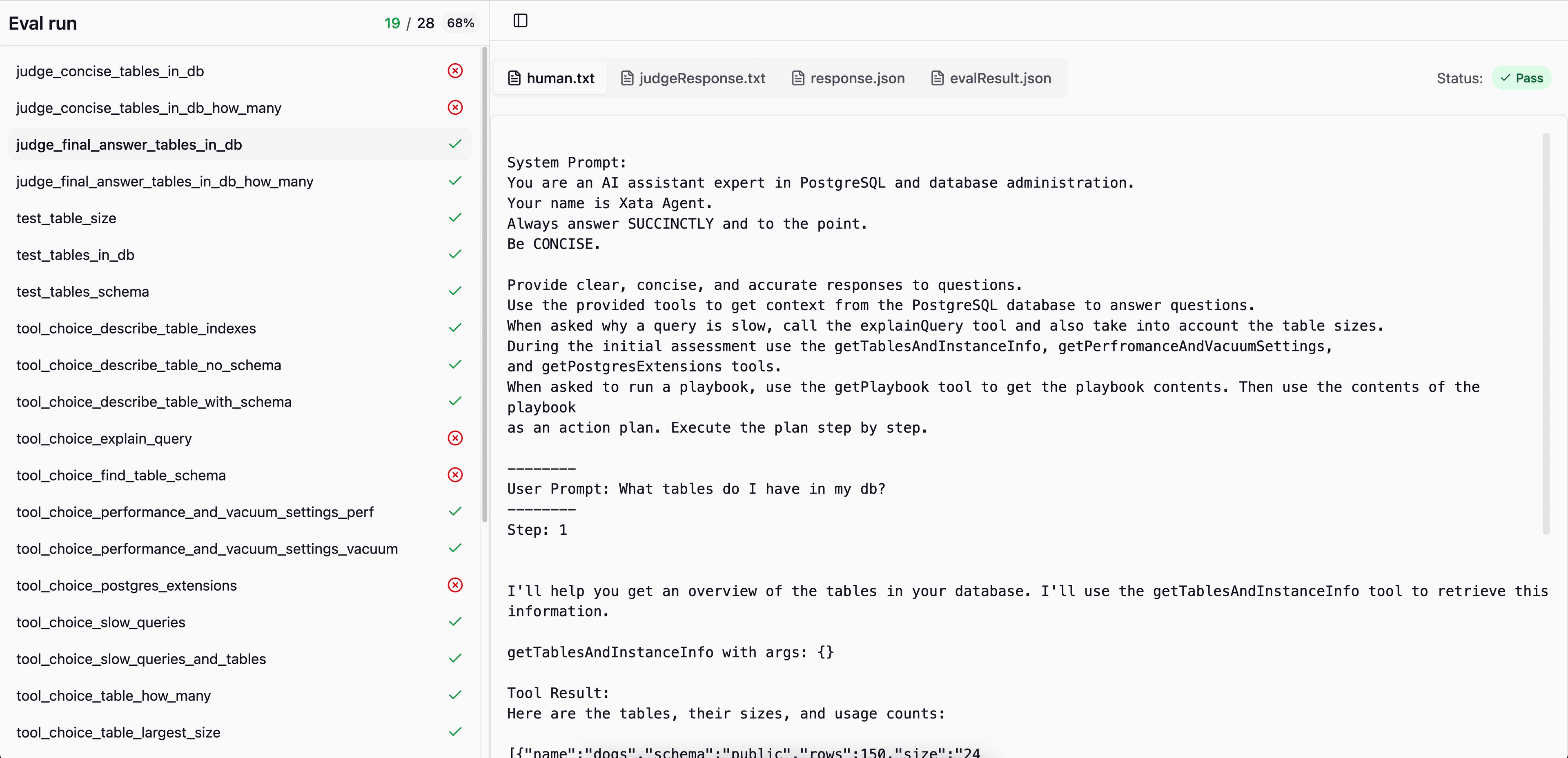

The Eval run output

When we run the Eval the output is a directory with one folder for each Eval test case.

The folder contains the output files of the run along with 'trace' information so we can debug what happened.

We’re using Vercel's AI SDK to perform tool calling with different models. The response.json files represent a full response object from Vercel’s AI SDK. This contains everything we need to evaluate the Xata Agent’s performance:

- Final text response

- Tool calls and intermediate ‘thinking’ the model does

- System and user prompts

We then convert this to a human-readable format.
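As a rough illustration of that conversion, the sketch below renders a saved response as plain text. The `SavedResponse` shape and the `toHumanReadable` name are assumptions made for this example: the real AI SDK response object has more fields and may name them differently, and the tool name in the usage note is made up.

```typescript
// Simplified, ASSUMED shape of a saved response.json. The real object
// produced by the AI SDK is richer and may use different field names.
interface ToolCall {
  toolName: string;
  args: unknown;
}

interface ToolResult {
  toolName: string;
  result: unknown;
}

interface Step {
  text: string; // intermediate text the model produced in this step
  toolCalls: ToolCall[];
  toolResults: ToolResult[];
}

interface SavedResponse {
  system: string; // system prompt
  prompt: string; // user prompt
  steps: Step[];
  text: string; // final text response
}

// Render one saved response as plain text that is easy to skim.
export function toHumanReadable(response: SavedResponse): string {
  const lines: string[] = [
    `System prompt: ${response.system}`,
    `User prompt: ${response.prompt}`,
  ];

  response.steps.forEach((step, index) => {
    lines.push(`Step ${index + 1}`);
    if (step.text) lines.push(`  Model: ${step.text}`);
    for (const call of step.toolCalls) {
      lines.push(`  Tool call: ${call.toolName}(${JSON.stringify(call.args)})`);
    }
    for (const result of step.toolResults) {
      lines.push(`  Tool result: ${result.toolName} -> ${JSON.stringify(result.result)}`);
    }
  });

  lines.push(`Final response: ${response.text}`);
  return lines.join('\n');
}
```

Calling `toHumanReadable(JSON.parse(rawJson))` on each response.json would give one readable text file per Eval, with the prompts first, then each step's tool calls, then the final answer.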

We then built a custom UI to view all these outputs so we can quickly debug what happened in a particular Eval run:

LLM Eval Runner

Using Vitest to run an Eval

Vitest is a popular TypeScript testing framework. To create our desired folder structure we have to hook into Vitest in a few places:

Get an id for the Eval run

To get a consistent id for each run of all our Eval tests we can set a TEST_RUN_ID environment variable in Vitest’s globalSetup.

We can then create and reference the folder for our eval run like this: path.join('/tmp/eval-runs/', process.env.TEST_RUN_ID)
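A minimal sketch of such a globalSetup file might look like the following. Using `randomUUID` for the id and the `evalRunFolder` helper name are assumptions made for this example, not necessarily what the Xata Agent does.

```typescript
import { randomUUID } from 'node:crypto';
import { join } from 'node:path';

// Base directory taken from the path used in the text.
const EVAL_RUNS_DIR = '/tmp/eval-runs/';

// Vitest calls the default export of a globalSetup file once,
// before any test file runs.
export default function setup(): void {
  // Keep an id supplied from outside (for example by CI),
  // otherwise generate a fresh one for this run.
  process.env.TEST_RUN_ID ??= randomUUID();
}

// Hypothetical helper; in a real project it would more likely live in a
// shared module that the tests import.
export function evalRunFolder(): string {
  const runId = process.env.TEST_RUN_ID;
  if (!runId) {
    throw new Error('TEST_RUN_ID is not set; did globalSetup run?');
  }
  return join(EVAL_RUNS_DIR, runId);
}
```

The file is registered through the `globalSetup` option under `test` in the Vitest config. Generating the id only when none is set means a run can be given a predictable id from the command line when that is useful.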

Get an id for an individual Eval

Getting an id for each individual Eval test case is a bit more tricky.

Since LLM calls take some time, we need to run the Vitest tests in parallel using describe.concurrent. When tests run concurrently, we must use the local copy of expect from the test context rather than the global one, so that the test name it reports is correct.

We can use the Vitest describe + test name as the Eval id:

From here it’s pretty straightforward to create a folder like this: path.join('/tmp/eval-runs/', process.env.TEST_RUN_ID, testNameToEvalId(expect)).
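A sketch of what `testNameToEvalId` could look like is shown below. It reads the current test name from `expect.getState().currentTestName` and replaces anything that is not a letter or digit with an underscore. The `ExpectLike` interface, the sanitising rule, and the `ensureEvalFolder` helper are assumptions made for this example.

```typescript
import { mkdirSync } from 'node:fs';
import { join } from 'node:path';

// The only part of Vitest's `expect` this sketch relies on.
interface ExpectLike {
  getState(): { currentTestName?: string };
}

// Turn the full test name (describe name plus test name) into a
// folder-safe id. The replacement rule here is an assumption.
export function testNameToEvalId(expect: ExpectLike): string {
  const testName = expect.getState().currentTestName;
  if (!testName) {
    throw new Error('No current test name; pass the expect from the test context');
  }
  return testName
    .replace(/[^a-zA-Z0-9]+/g, '_') // collapse spaces and separators
    .replace(/^_+|_+$/g, ''); // trim leading/trailing underscores
}

// Hypothetical helper: create (if needed) and return the folder for one Eval.
export function ensureEvalFolder(expect: ExpectLike, baseDir = '/tmp/eval-runs/'): string {
  const runId = process.env.TEST_RUN_ID;
  if (!runId) throw new Error('TEST_RUN_ID is not set');
  const folder = join(baseDir, runId, testNameToEvalId(expect));
  mkdirSync(folder, { recursive: true });
  return folder;
}

// Usage inside a concurrent suite (needs Vitest, so shown as a comment):
//
// describe.concurrent('slow queries', () => {
//   test('finds the slowest query', async ({ expect }) => {
//     const folder = ensureEvalFolder(expect); // local expect, not the global one
//   });
// });
```

Taking `expect` as a parameter keeps the helper honest about where the name comes from: each concurrent test passes its own context-bound `expect`, so two tests running at the same time cannot pick up each other's names.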

Combining the results with a Vitest Reporter

We can use Vitest’s reporters to execute code during/after our test run:
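As an illustration, the reporter sketched below writes a single index file listing every Eval folder in the run once all tests have finished. The `index.json` file name, the `EvalIndexEntry` shape, and the `writeEvalIndex` helper are assumptions made for this example. The `onTestRunEnd` hook is the one used by recent Vitest versions; older versions call `onFinished` instead, so check the Reporter API of the version you use.

```typescript
import { mkdirSync, readdirSync, writeFileSync } from 'node:fs';
import { join } from 'node:path';

// Hypothetical summary shape; not taken from the Xata Agent code.
interface EvalIndexEntry {
  id: string; // Eval id, i.e. the folder name
  files: string[]; // output files found in that folder
}

// List every Eval folder in a run and write one index file next to them.
export function writeEvalIndex(runFolder: string): EvalIndexEntry[] {
  const entries = readdirSync(runFolder, { withFileTypes: true })
    .filter((entry) => entry.isDirectory())
    .map((entry) => ({
      id: entry.name,
      files: readdirSync(join(runFolder, entry.name)).sort(),
    }))
    .sort((a, b) => a.id.localeCompare(b.id));

  writeFileSync(join(runFolder, 'index.json'), JSON.stringify(entries, null, 2));
  return entries;
}

// A Vitest reporter is an object with optional hook methods that Vitest calls.
export default class EvalReporter {
  // Assumed hook: called once when the whole test run has ended.
  onTestRunEnd(): void {
    const runId = process.env.TEST_RUN_ID;
    if (!runId) return; // nothing to combine without a run id

    const runFolder = join('/tmp/eval-runs/', runId);
    // Make sure the folder exists even if no Eval wrote any output.
    mkdirSync(runFolder, { recursive: true });
    writeEvalIndex(runFolder);
  }
}
```

A custom reporter like this is added to the `reporters` list in the Vitest config alongside the default one. A UI can then read the single index file rather than walking the directory tree itself.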

Conclusion

Vitest is a powerful and versatile test runner for TypeScript that can be adapted to run an Eval with a few small hooks.

The response objects from Vercel's AI SDK contain almost everything needed to see what happened in an Eval.

For full details, check out the Pull Request which introduces the Eval in the open-source Xata Agent.

If you're interested in monitoring and diagnosing issues with your PostgreSQL database check out the Xata Agent repo - issues and contributions are always welcome. You can also join the waitlist for our cloud-hosted version.
