pgroll 0.9.0 update

pgroll v0.9 includes one breaking change, several improvements to table level constraint definitions and better CLI feedback

Author

Andrew FarriesDate published

We've just released v0.9 of pgroll, our open-source schema migration tool for Postgres. As we've done for previous releases, we'll take a look at some of the headline features that made it into the release and look ahead to what's coming next. Past entries in the release blog series can be read here:

For the complete notes for this release see the Github v0.9.0 release page.

What is pgroll?

pgroll is a schema migration tool for Postgres. Designed for application developers working on applications that require frequent schema changes but also need to maintain zero downtime around those schema changes, pgroll takes a different approach compared to most other migration tools on the market. There are two aspects that characterize pgroll's approach to migrations:

- Multi-version migrations: Making a schema change with

pgrollresults in two versions of the schema; the one before the change and the one after the change - this allows applications to select which version of the schema they want to work with and allows side-by-side rollout of applications that require the new schema changes with old applications that may be incompatible with it. - Lock-safe migrations: Migrations using

pgrollare expressed declaratively, rather than using SQL directly. This allowspgrollto implement the steps required to perform the schema change in a safe manner, ensuring that any locks required on the affected objects are held for the shortest possible time.

Full documentation for pgroll is in the documentation section of this site.

pgroll v0.9 includes several new features and one breaking change. Let's take a look at what went into the v0.9 release in more detail:

Breaking change to alter_column operations

pgroll v0.9 includes one breaking change to how columns in tables are renamed. Previously, an alter_column operation was used to rename a column, like this:

The name field of the alter_column operation was used to specify the new name of the column. Here, the role field in the employees table was renamed to job_title.

With pgroll v0.9 this method of renaming a column is no longer supported; the new rename_column operation should be used instead. The same migration is now written:

Expressing column renames in this way makes it easier to combine renames with other operations in the same migration and adheres more closely to SQL where column renames are performed with ALTER TABLE ... RENAME COLUMN statements, not ALTER TABLE ... ALTER COLUMN statements.

Table level constraints

pgroll v0.9 introduces several new options for the create_table operation. It is now possible to create table-level constraints in the operation directly using the new constraints field rather than having to add constraints separately after table creation.

Full documention can be found in the docs for the create_table operation.

We'll look at each supported constraint type in turn:

Primary key constraints

Previously, columns that should be included in a primary key constraint were marked with pk: true in the column definitions themselves:

This way of creating tables with primary key constraints is still the best way to create simple primary key constraints, but the new constraints field offers an alternative way to create more complex primary keys:

Unique constraints

As with primary keys, single column unique constraints can be added when creating a table by setting unique: true in the column definitions themselves:

But by using the constraints field instead, more complex unique constraints are possible:

The constraints field allows us to define unique constraints spanning multiple columns and setting options like included columns.

Check constraints

As with primary keys and unique constraints, single column check constraints on new tables have always been possible to create directly in the definition of each column:

For the creation of more complex check constraints spanning multiple columns or setting constraint options, use the constraints field instead:

Foreign key constraints

As with the other constraints, simple foreign key constraints can be added at table creation time directly in a column definition:

For more complex foreign key constraints including different constraint options, use the constraints field at table level instead:

Exclude constraints

It's also possible to specify exclude constraints using the table-level constraints field:

Exclude constraints were not possible to specify in any version of pgroll before v0.9.

Generated columns

pgroll v0.9 adds support for generated columns in create_table and add_column operations. To create a generated column, use the generated field in a column definition:

The documentation for the create_table operation describes the full set of options that are available for generated columns.

CLI progress updates

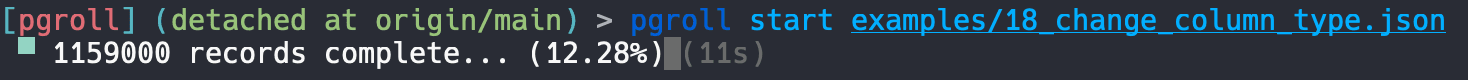

When pgroll modifies a column, for example to add a constraint, the column is duplicated and the constraint is added to the duplicated column. Data is then 'backfilled' into the new column from the old column, performing any data migrations as necessary using the user-supplied up SQL expression from the migration definition. This process is the heart of how pgroll is able to offer two schema versions for every migration.

The backfill process is the most time consuming part of starting a pgroll migration. With pgroll v0.9 the CLI output now gives a better indication of how far the process is from completion:

The CLI now shows how much work remains in the backfill process

Conclusion

pgroll is open-source; check out the repository to get involved.

Many of the features that have gone into this and previous releases have been directly influenced by how users tell us they are using the tool and where it's currently lacking. We welcome any feedback in issues, or contributions via pull requests!

Related Posts

pgroll v0.8.0: Support for multi-column primary keys, multi-column constraints & new CLI commands

Learn about the latest changes in pgroll in the v0.8.0 release as we add new CLI commands, expand what's possible with multi-column keys and constraints and add performance benchmarks.

pgroll 0.10.0 update

pgroll v0.10 includes one breaking change, a new subcommand to convert SQL statements into pgroll operations and improvements to adding default values to existing tables.

pgroll v0.11.0: YAML migration support, pgroll.migration table improvements & more

pgroll v0.11 includes support for YAML migration files, a fix for the bookkeeping of pgroll, and smaller usability improvements.